Project Overview

This research project explores the differences between hand tracking and traditional VR controllers in terms of user experience, immersion, and task completion efficiency. Developed for academic research purposes, the study involved creating multiple VR prototypes and conducting user testing sessions with participants.

The project examined how each interaction method affects user behavior in virtual environments, with the goal of informing interaction and interface design decisions in future VR applications.

Research Findings

The study collected performance and questionnaire data from 24 participants aged 18-45 with varying levels of VR experience, covering both interaction preferences and task performance.

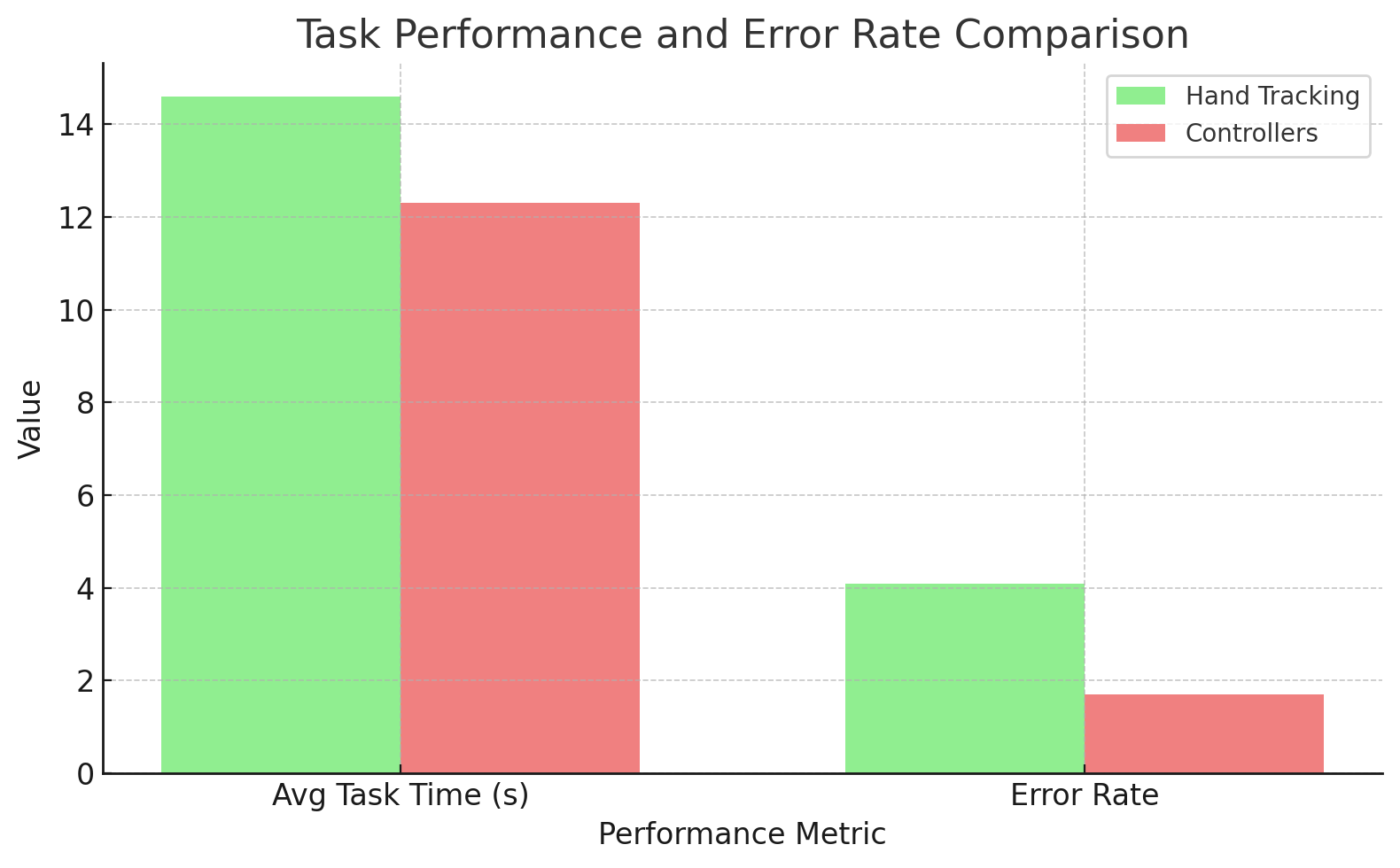

Performance Metrics Analysis:

Key Performance Insights:

- Task Completion Time: Hand tracking had a longer average completion time (14.5 s) than controllers (12.3 s)

- Error Rates: Hand tracking had higher error rates (4.1) than controllers (1.7)

- Learning Curve: Controllers demonstrated faster initial adoption for complex interactions

- Precision Tasks: Controllers provided superior accuracy for fine motor control tasks
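The 15% and 58% reductions reported under Results & Implications follow from these means when the difference is expressed relative to the hand-tracking value. If the controller value were used as the baseline instead, the time gap would be about 17.9%. The short check below reproduces that arithmetic, using only the means listed above.

```csharp
using System;

// Means reported in the Key Performance Insights list.
const double handTimeS = 14.5, controllerTimeS = 12.3;
const double handErrors = 4.1, controllerErrors = 1.7;

// Percentage reduction of `value` relative to `baseline`.
static double RelativeReduction(double baseline, double value) =>
    (baseline - value) / baseline * 100.0;

double timeReduction = RelativeReduction(handTimeS, controllerTimeS);    // about 15.2%
double errorReduction = RelativeReduction(handErrors, controllerErrors); // about 58.5%

Console.WriteLine($"Controllers: {timeReduction:F1}% less time, {errorReduction:F1}% fewer errors");
```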

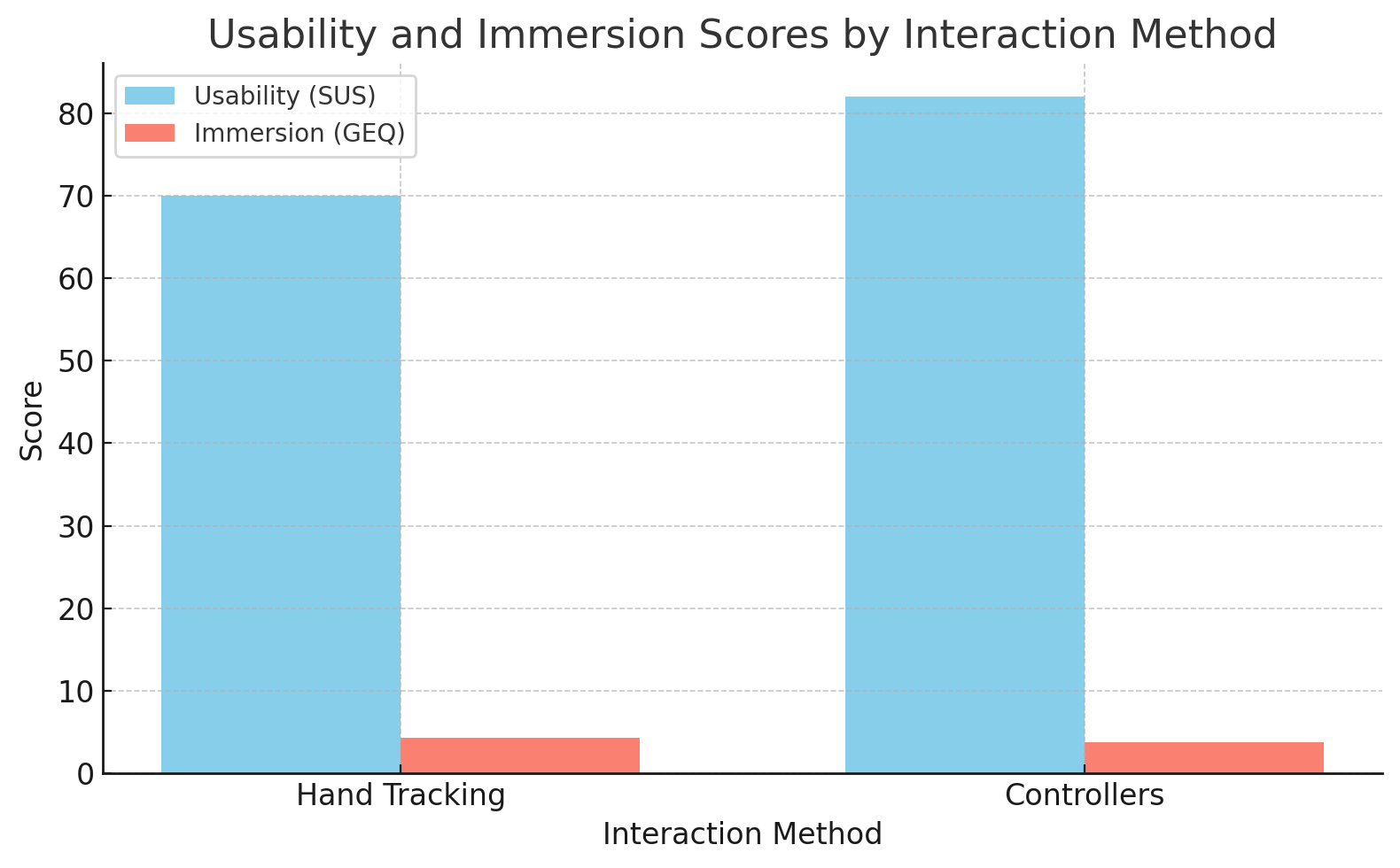

Usability and Immersion Analysis:

User Experience Findings:

- Immersion Scores: Hand tracking received slightly higher immersion ratings (GEQ scores)

- Usability (SUS): Controllers scored markedly higher in usability assessments (82 vs 68)

- User Preference: 65% of participants preferred controllers for task-based activities

- Natural Interaction: Hand tracking was favored for exploratory and casual interactions

Key Features

Dual Interaction Systems

Implemented both hand tracking and controller-based interaction in the same application for direct comparison.

Data Collection Framework

Built-in analytics system that tracks completion times, error rates, and user behavior patterns in real time.

Immersive Test Environments

Multiple virtual scenarios designed to test different interaction paradigms and use cases.

Performance Metrics

Comprehensive tracking of task completion times, error rates, and standardized usability scores (SUS, GEQ).

Cross-Platform Compatibility

Developed for Meta Quest 3, built on the cross-platform XR Interaction Toolkit so it can be extended to other VR headsets.

Research Methodology

Structured user studies with standardized testing protocols and statistical analysis.

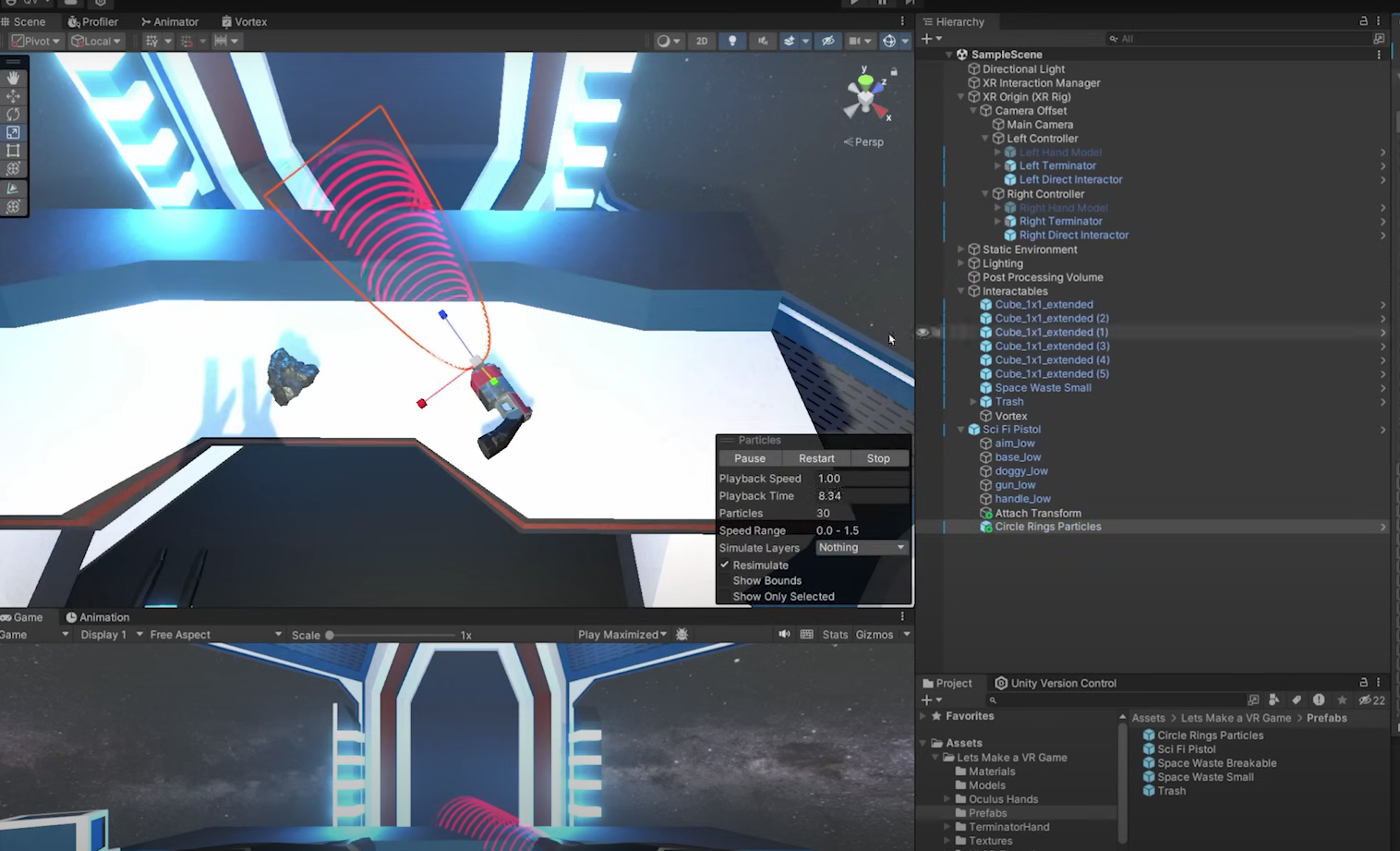

Technical Implementation

The project was built with Unity's XR Interaction Toolkit, using the Meta Quest 3's hand tracking alongside standard controller support so that both input methods could be compared within the same application.

Unity Development Environment:

Core Technologies:

- Unity 2022.3 LTS - Primary development environment with XR support

- XR Interaction Toolkit - Cross-platform VR interaction framework

- Meta Quest 3 SDK - Hand tracking and device-specific optimization

- C# Scripting - Custom interaction logic and data collection systems

- Unity Analytics - Real-time performance tracking and data visualization

Hand Tracking Integration:

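```csharp
// Hand tracking detection and interaction mode switching.
// Assumes the Unity XR Hands package (UnityEngine.XR.Hands) is installed;
// DataCollectionManager is the project's own logging component.
using System.Collections.Generic;
using UnityEngine;
using UnityEngine.XR;
using UnityEngine.XR.Hands;

public class InteractionModeManager : MonoBehaviour
{
    public GameObject handTrackingUI;
    public GameObject controllerUI;
    public ParticleSystem feedbackParticles;
    public DataCollectionManager dataManager; // assign in the Inspector

    private XRHandSubsystem handTracking;
    private bool handsAreTracked = false;
    private bool? usingHands = null; // null until the first mode is chosen
    private readonly List<XRHandSubsystem> subsystems = new List<XRHandSubsystem>();
    private readonly List<InputDevice> controllers = new List<InputDevice>();

    void Update()
    {
        CheckHandTrackingStatus();
    }

    void CheckHandTrackingStatus()
    {
        // Subsystems cannot be assigned in the Inspector, so find the running one at runtime.
        if (handTracking == null || !handTracking.running)
        {
            SubsystemManager.GetSubsystems(subsystems);
            handTracking = subsystems.Find(s => s.running);
        }

        handsAreTracked = handTracking != null && AreHandsVisible();
        bool controllerActive = IsControllerActive();

        // Switch only when the mode changes, so logging and feedback fire once per switch.
        if (handsAreTracked && !controllerActive)
        {
            if (usingHands != true) SwitchToHandTracking();
        }
        else if (controllerActive && usingHands != false)
        {
            SwitchToControllers();
        }
    }

    bool AreHandsVisible() =>
        handTracking.leftHand.isTracked || handTracking.rightHand.isTracked;

    bool IsControllerActive()
    {
        InputDevices.GetDevicesWithCharacteristics(
            InputDeviceCharacteristics.Controller | InputDeviceCharacteristics.HeldInHand, controllers);
        foreach (InputDevice device in controllers)
        {
            if (device.TryGetFeatureValue(CommonUsages.isTracked, out bool tracked) && tracked)
                return true;
        }
        return false;
    }

    void SwitchToHandTracking()
    {
        usingHands = true;
        handTrackingUI.SetActive(true);
        controllerUI.SetActive(false);
        dataManager.LogModeSwitch("HandTracking", Time.time);
        feedbackParticles.Play();
    }

    void SwitchToControllers()
    {
        usingHands = false;
        handTrackingUI.SetActive(false);
        controllerUI.SetActive(true);
        dataManager.LogModeSwitch("Controllers", Time.time);
    }
}
```

VR Environment Design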

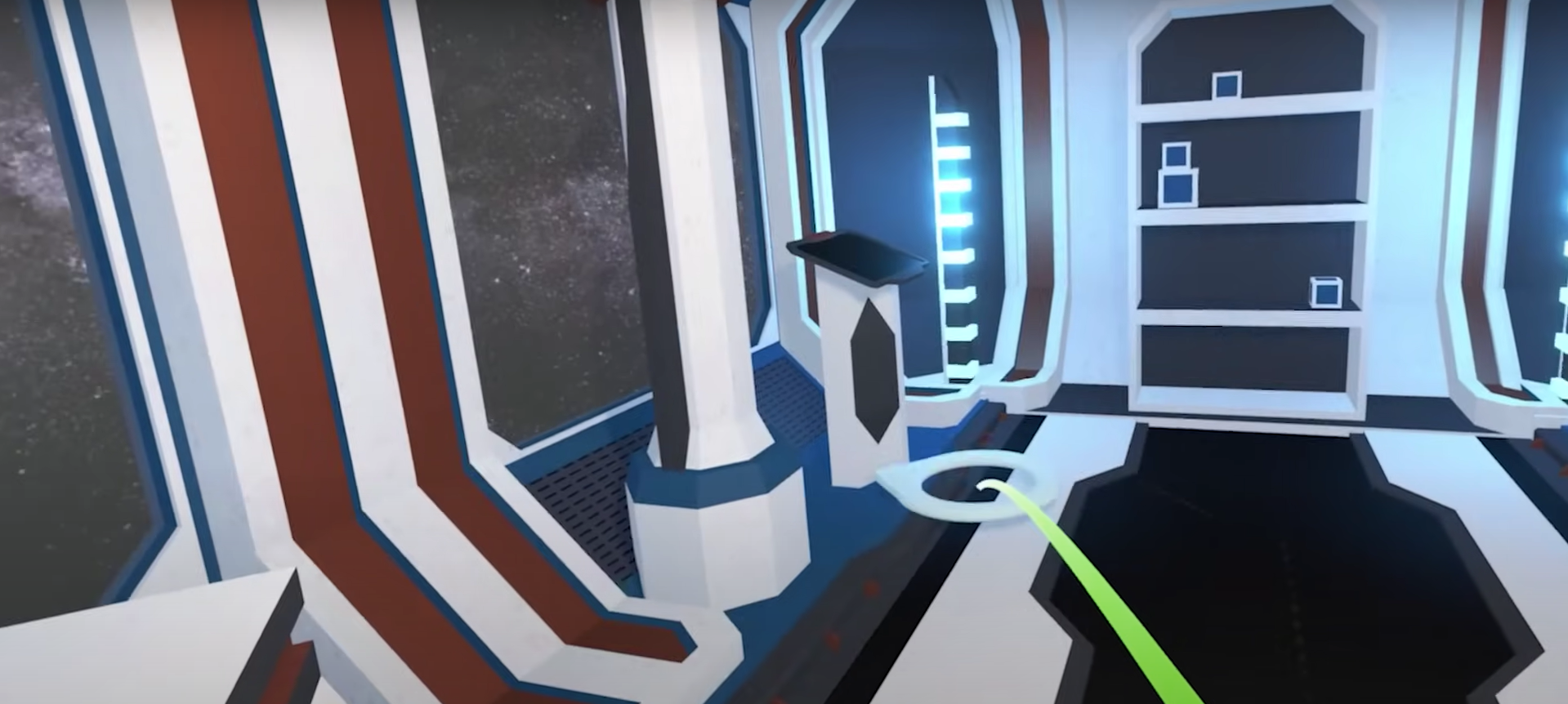

The research environments were carefully designed to provide controlled testing conditions while maintaining high immersion levels for accurate user behavior assessment.

Laboratory Environment:

Environment Features:

- Modular Design - Interchangeable test scenarios for different interaction types

- Visual Feedback Systems - Real-time indication of successful interactions

- Consistent Lighting - Optimal conditions for hand tracking accuracy

- Spatial Audio - 3D audio cues for enhanced immersion and guidance

- Comfort Settings - Adjustable movement options to prevent motion sickness

Interaction Testing Scenarios:

- Object Manipulation - Precise grabbing and placement tasks

- UI Interaction - Button pressing and menu navigation

- Gesture Recognition - Custom hand gesture detection and response

- Multi-Object Tasks - Complex scenarios requiring multiple interactions

- Distance Interaction - Ray-casting and remote object manipulation
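The study reports interaction accuracy and error counts for tasks such as precise placement, but it does not say how a placement was scored. One plausible approach is to measure the distance between the placed object and its target and count the attempt as a success if it falls within a tolerance. The sketch below follows that approach under stated assumptions. The 2 cm tolerance and the `EvaluatePlacement` helper are hypothetical. `System.Numerics.Vector3` stands in for Unity's `Vector3`, which offers an equivalent `Vector3.Distance`.

```csharp
using System;
using System.Numerics;

// Hypothetical tolerance; the source does not state the threshold used.
const float PlacementToleranceM = 0.02f; // 2 cm

// Hypothetical helper: returns the positional error (metres) and whether it counts as a success.
static (float errorM, bool success) EvaluatePlacement(Vector3 placed, Vector3 target, float tolerance)
{
    float error = Vector3.Distance(placed, target);
    return (error, error <= tolerance);
}

var (err, ok) = EvaluatePlacement(new Vector3(0.10f, 1.00f, 0.50f),
                                  new Vector3(0.11f, 1.00f, 0.50f),
                                  PlacementToleranceM);
Console.WriteLine($"error = {err:F3} m, success = {ok}");
```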

Research Methodology

The study employed a within-subjects design where each participant experienced both interaction methods, ensuring robust comparative data while controlling for individual differences.

Study Design:

- Participants: 24 participants (ages 18-45) with varying VR experience levels

- Session Duration: 45-60 minutes per participant including setup and questionnaires

- Task Types: 6 standardized interaction tasks repeated for both modalities

- Counterbalancing: The order of interaction methods was randomized across participants to balance out learning and order effects

- Data Collection: Automated logging of all interactions with millisecond-precision timestamps
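The order of the two modalities was randomized. The source does not say whether the two order groups were kept equal in size. One way to randomize while keeping the groups balanced is to build a list with equal numbers of each order and shuffle it. The sketch below uses a seeded Fisher-Yates shuffle so the assignment can be reproduced. The order labels and the `AssignOrders` helper are hypothetical. With 24 participants this gives 12 per order.

```csharp
using System;
using System.Linq;

// Hypothetical helper: balanced, reproducible random assignment of modality order.
static string[] AssignOrders(int participants, int seed)
{
    if (participants % 2 != 0)
        throw new ArgumentException("Use an even number of participants for full balance.");

    // Half the participants start with hand tracking, half with controllers.
    var orders = Enumerable.Range(0, participants)
        .Select(i => i % 2 == 0 ? "HandsFirst" : "ControllersFirst")
        .ToArray();

    // Fisher-Yates shuffle; a fixed seed makes the assignment reproducible.
    var rng = new Random(seed);
    for (int i = orders.Length - 1; i > 0; i--)
    {
        int j = rng.Next(i + 1);
        (orders[i], orders[j]) = (orders[j], orders[i]);
    }
    return orders;
}

string[] assignment = AssignOrders(24, seed: 42);
for (int p = 0; p < assignment.Length; p++)
    Console.WriteLine($"P{p + 1:D2}: {assignment[p]}");
```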

Metrics Collected:

- Objective Measures: Task completion time, error count, interaction accuracy

- Subjective Measures: System Usability Scale (SUS), Game Experience Questionnaire (GEQ)

- Behavioral Data: Movement patterns, gesture recognition confidence scores

- Preference Data: Post-session interviews and preference rankings
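The SUS values reported in this study (82 vs 68) are on the questionnaire's 0-100 scale. The standard scoring rule converts ten 1-5 Likert responses as follows:

- Odd-numbered (positively worded) items contribute the response minus 1.
- Even-numbered (negatively worded) items contribute 5 minus the response.
- The sum is multiplied by 2.5.

The minimal sketch below applies that rule to one hypothetical set of responses, which is not study data.

```csharp
using System;

// Standard SUS scoring for one participant's ten responses (each rated 1-5).
static double SusScore(int[] responses)
{
    if (responses.Length != 10)
        throw new ArgumentException("SUS has exactly 10 items.");
    int sum = 0;
    for (int i = 0; i < 10; i++)
    {
        int r = responses[i];
        if (r < 1 || r > 5)
            throw new ArgumentOutOfRangeException(nameof(responses), "Items are rated 1-5.");
        // Index 0, 2, 4, ... are items 1, 3, 5, ... (positively worded).
        sum += (i % 2 == 0) ? r - 1 : 5 - r;
    }
    return sum * 2.5; // scale 0-40 to 0-100
}

// Hypothetical responses for one participant (not study data).
int[] example = { 4, 2, 5, 1, 4, 2, 5, 2, 4, 1 };
Console.WriteLine(SusScore(example));
```

Per-condition figures like those in the results would then be aggregates of these per-participant scores. The source does not state how they were aggregated.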

Results & Implications

Statistical Findings:

- Task Efficiency: Controllers completed tasks in about 15% less time than hand tracking (p < 0.05)

- Error Reduction: Controllers had 58% fewer interaction errors (p < 0.01)

- Usability Rating: Controllers scored 14 points higher on the SUS (82 vs 68)

- Immersion Paradox: Hand tracking rated higher for "naturalness" despite lower performance
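The source does not name the statistical test behind these p-values. For a within-subjects design like this one, a paired t-test on per-participant differences is one common choice. The Wilcoxon signed-rank test is the usual non-parametric alternative. The minimal sketch below computes the paired t statistic from made-up numbers, not study data. The p-value would then come from the t distribution with n - 1 degrees of freedom, for example via a statistics library.

```csharp
using System;
using System.Linq;

// Paired t statistic: mean of within-participant differences divided by its standard error.
static (double t, int df, double meanDiff) PairedT(double[] a, double[] b)
{
    if (a.Length != b.Length || a.Length < 2)
        throw new ArgumentException("Need two equal-length samples with n >= 2.");
    double[] d = a.Zip(b, (x, y) => x - y).ToArray();
    int n = d.Length;
    double mean = d.Average();
    // Sample standard deviation of the differences (n - 1 denominator).
    double sd = Math.Sqrt(d.Sum(v => (v - mean) * (v - mean)) / (n - 1));
    return (mean / (sd / Math.Sqrt(n)), n - 1, mean);
}

// Hypothetical per-participant mean completion times in seconds (NOT study data).
double[] hands       = { 14.0, 15.2, 13.8, 14.9, 15.1, 14.0 };
double[] controllers = { 12.1, 12.8, 12.0, 12.6, 12.4, 11.9 };

var (tStat, dfOut, diff) = PairedT(hands, controllers);
Console.WriteLine($"mean difference = {diff:F2} s, t({dfOut}) = {tStat:F2}");
```

With real data, both arrays would hold each participant's value for the two modalities in the same participant order.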

Design Implications:

- Context-Dependent Choice: Interaction method should match application type

- Hybrid Approaches: Combining both methods could optimize user experience

- Learning Considerations: Hand tracking requires longer adaptation periods

- Accessibility: Controllers provide more reliable interaction for users with motor limitations

Future VR Development:

- Adaptive Interfaces: Systems that switch between modalities based on task type

- Improved Hand Tracking: Better gesture recognition could close the performance gap

- Training Protocols: Structured onboarding for hand tracking proficiency

- Multimodal Feedback: Enhanced haptic and audio cues for hand tracking

Development Challenges & Solutions

Hand Tracking Calibration:

Challenge: Ensuring consistent hand tracking accuracy across different users and lighting conditions.

Solution: Implemented adaptive calibration routines and multiple fallback interaction methods for tracking failures.
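One way to implement a fallback for tracking failures is to tolerate brief dropouts. The system switches to controllers only after hand tracking has been lost for a grace period, which keeps the interaction mode from flickering. The engine-agnostic sketch below assumes a hypothetical 0.5 s grace period and string mode names; it is not the project's implementation. In a Unity component, `Tick` would be called from `Update` with `Time.time`.

```csharp
using System;

// Hypothetical grace period before falling back from hands to controllers.
const double GraceSeconds = 0.5;

double? lostSince = null; // time at which hand tracking was first lost
string mode = "Hands";

// Called once per frame with the current time and tracking status.
void Tick(double now, bool handsTracked, bool controllerActive)
{
    if (handsTracked)
    {
        lostSince = null;                  // tracking recovered: reset the timer
        if (!controllerActive) mode = "Hands";
        return;
    }
    lostSince ??= now;                     // remember when tracking was first lost
    if (controllerActive && now - lostSince.Value >= GraceSeconds)
        mode = "Controllers";
}

Tick(0.0, true, false);  // hands visible
Tick(0.1, false, true);  // dropout starts, still Hands
Tick(0.3, false, true);  // 0.2 s lost, still Hands
Tick(0.7, false, true);  // 0.6 s lost, falls back to Controllers
Console.WriteLine(mode);
```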

Data Collection Precision:

Challenge: Capturing precise interaction timing and gesture recognition confidence scores.

Solution: Developed a custom analytics framework with millisecond-precision logging and automated data validation.
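The source describes the logging and validation framework but does not show it. The sketch below is a hypothetical illustration of the idea, not the project's implementation. Events are timestamped with a monotonic high-resolution clock (`System.Diagnostics.Stopwatch`). A validation pass then checks that timestamps never go backwards and that every task start has a matching end. The event names, record fields, and CSV layout are assumptions.

```csharp
using System;
using System.Collections.Generic;
using System.Diagnostics;
using System.Globalization;

// Hypothetical event record: participant, modality, task, event name, timestamp in ms.
var log = new List<(string Participant, string Modality, int Task, string Event, double TimestampMs)>();
var clock = Stopwatch.StartNew(); // monotonic, high-resolution timer

void Log(string participant, string modality, int task, string evt) =>
    log.Add((participant, modality, task, evt, clock.Elapsed.TotalMilliseconds));

// Validation: timestamps never decrease, and each TaskStart is closed by a TaskEnd for the same task.
bool Validate()
{
    int? openTask = null;
    for (int i = 0; i < log.Count; i++)
    {
        if (i > 0 && log[i].TimestampMs < log[i - 1].TimestampMs) return false;
        if (log[i].Event == "TaskStart") { if (openTask != null) return false; openTask = log[i].Task; }
        if (log[i].Event == "TaskEnd") { if (openTask != log[i].Task) return false; openTask = null; }
    }
    return openTask == null;
}

Log("P01", "Hands", 1, "TaskStart");
Log("P01", "Hands", 1, "Error");
Log("P01", "Hands", 1, "TaskEnd");

foreach (var e in log)
    Console.WriteLine(string.Join(",", e.Participant, e.Modality, e.Task, e.Event,
        e.TimestampMs.ToString("F3", CultureInfo.InvariantCulture)));
Console.WriteLine($"valid = {Validate()}");
```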

Cross-Modal Consistency:

Challenge: Ensuring equivalent task difficulty across hand tracking and controller modalities.

Solution: Extensive pilot testing and iterative design refinement to balance interaction complexity.

User Comfort & Fatigue:

Challenge: Managing user fatigue during extended testing sessions.

Solution: Implemented adaptive break scheduling and comfort monitoring with session time limits.
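Adaptive break scheduling can be expressed as a small decision rule evaluated between tasks. In this sketch, the thresholds and the 0-10 discomfort self-report are hypothetical. Only the 60-minute ceiling echoes the 45-60 minute session length reported in the study design.

```csharp
using System;

// All thresholds are hypothetical; the source does not state the values used.
const double MaxMinutesBetweenBreaks = 15;
const double SessionLimitMinutes = 60;   // upper end of the reported session length
const int DiscomfortThreshold = 5;       // on a hypothetical 0-10 self-report scale

// Decide, between tasks, whether to end the session, take a break, or continue.
string NextAction(double sessionMinutes, double minutesSinceBreak, int discomfort)
{
    if (sessionMinutes >= SessionLimitMinutes) return "EndSession";
    if (discomfort >= DiscomfortThreshold || minutesSinceBreak >= MaxMinutesBetweenBreaks)
        return "Break";
    return "Continue";
}

Console.WriteLine(NextAction(sessionMinutes: 20, minutesSinceBreak: 16, discomfort: 2));
```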

Future Research Directions

This study opens several avenues for continued research in VR interaction design and user experience optimization.

- Longitudinal Studies: Investigating long-term adaptation to hand tracking interfaces

- Task Complexity Analysis: How interaction preferences change with task complexity

- Age Group Differences: Comparing interaction preferences across different age demographics

- Accessibility Research: VR interaction design for users with different motor abilities

- Hybrid Interaction Models: Developing systems that intelligently combine both modalities

- Cross-Platform Validation: Replicating findings across different VR hardware platforms